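While working on our undergraduate innovation project, the original plan was to build our own dataset, so I wrote a script for producing a face image dataset. A later meeting settled on a different approach, we no longer had to prepare the dataset ourselves, and the script ended up being of no use to the project.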

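I still want to write it down, though. This was my first time using this kind of API service, and I was genuinely impressed by its speed and accuracy: much faster than a crawler, with much better quality. It really shows what raw performance can do.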

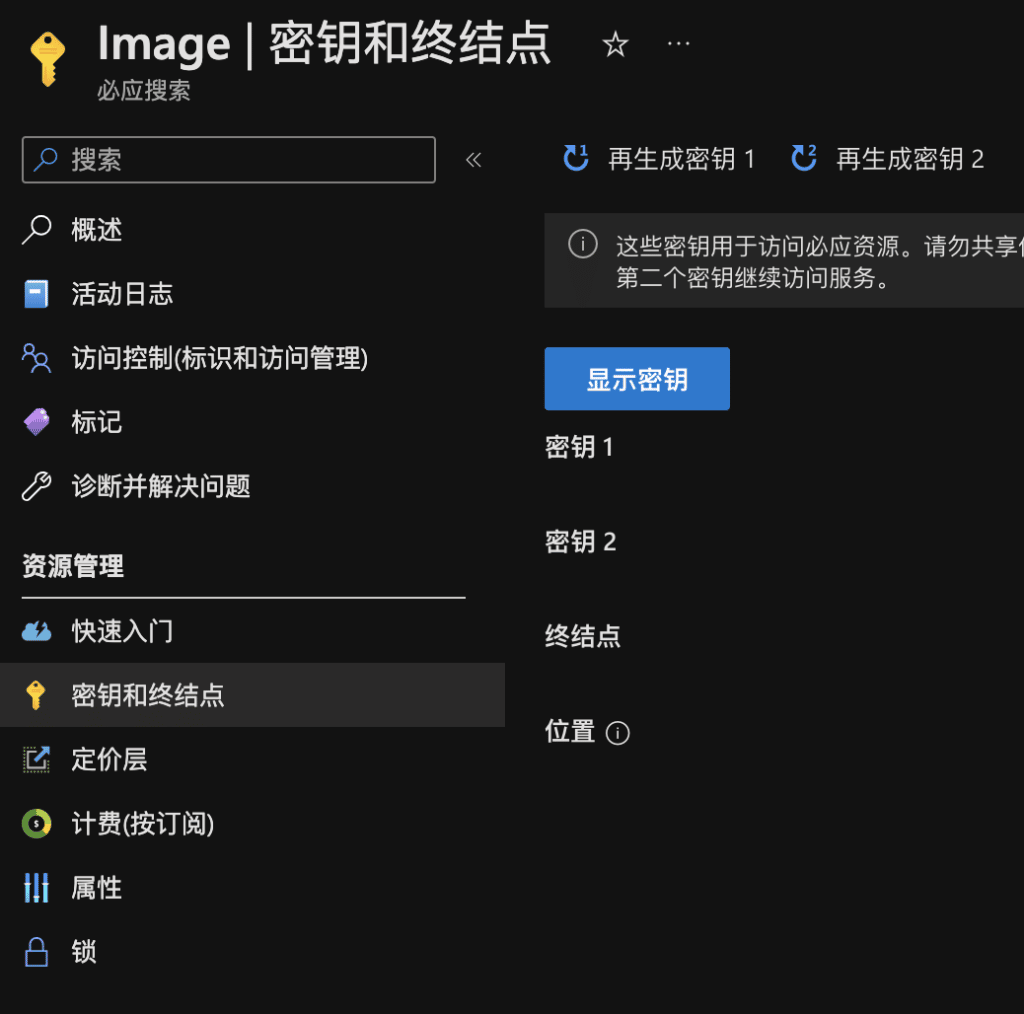

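The script uses the Bing Image Search API. With student verification on Microsoft Azure you can apply for a resource with 1,000 calls per month; once you have the key, fill it in as `API_KEY` in the script below.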

Apply and create the resource here: https://www.microsoft.com/en-us/bing/apis/bing-image-search-api
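One way to avoid pasting the key into the script itself is to read it from an environment variable. The sketch below assumes a variable named `BING_API_KEY`; that name is picked here purely for illustration and is not something Azure or the script defines. `load_api_key` and `build_headers` are likewise hypothetical helpers.

```python
import os


def load_api_key(env_var="BING_API_KEY"):
    """Return the key stored in the given environment variable.

    `BING_API_KEY` is a hypothetical name chosen for this sketch.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            "set the {} environment variable to your Bing key".format(env_var))
    return key


def build_headers(key):
    # the same header name the script uses to authenticate
    return {"Ocp-Apim-Subscription-Key": key}
```

With this in place, `API_KEY = load_api_key()` could stand in for the hard-coded string, and the key stays out of anything you publish.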

```python
# -*- coding: utf-8 -*-
# @Author : XFishalways
# @Time : 2022/9/20 12:56 AM
# @Function: Use the Bing Image Search API to create a celebrity dataset

# import the necessary packages
from requests import exceptions
import argparse
import requests
import cv2
import os

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-q", "--query", required=True,
                help="search query to search Bing Image API for")
ap.add_argument("-o", "--output", required=True,
                help="path to output directory of images")
args = vars(ap.parse_args())

# set your Microsoft Cognitive Services API key along with (1) the
# maximum number of results for a given search and (2) the group size
# for results (3 per request here)
API_KEY = "YOUR_API_KEY"  # paste your own key here
MAX_RESULTS = 6
GROUP_SIZE = 3

# set the endpoint API URL
URL = "https://api.bing.microsoft.com/v7.0/images/search"

# when attempting to download images from the web both the Python
# programming language and the requests library have a number of
# exceptions that can be thrown, so let's build a tuple of them now
# that can be passed straight to `except`
EXCEPTIONS = (IOError, FileNotFoundError, exceptions.RequestException,
              exceptions.HTTPError, exceptions.ConnectionError,
              exceptions.Timeout)

# store the search term in a convenience variable then set the
# headers and search parameters
term = args["query"]
headers = {"Ocp-Apim-Subscription-Key": API_KEY}
params = {"q": term, "offset": 0, "count": GROUP_SIZE}

# make the search
print("[INFO] searching Bing API for '{}'".format(term))
search = requests.get(URL, headers=headers, params=params)
search.raise_for_status()

# grab the results from the search, including the total number of
# estimated results returned by the Bing API
results = search.json()
estNumResults = min(results["totalEstimatedMatches"], MAX_RESULTS)
print("[INFO] {} total results for '{}'".format(estNumResults, term))

# initialize the total number of images downloaded thus far
total = 0

# loop over the estimated number of results in `GROUP_SIZE` groups
for offset in range(0, estNumResults, GROUP_SIZE):
    # update the search parameters using the current offset, then
    # make the request to fetch the results
    print("[INFO] making request for group {}-{} of {}...".format(
        offset, offset + GROUP_SIZE, estNumResults))
    params["offset"] = offset
    search = requests.get(URL, headers=headers, params=params)
    search.raise_for_status()
    results = search.json()
    print("[INFO] saving images for group {}-{} of {}...".format(
        offset, offset + GROUP_SIZE, estNumResults))

    # loop over the results
    for v in results["value"]:
        # try to download the image
        try:
            # make a request to download the image
            print("[INFO] fetching: {}".format(v["contentUrl"]))
            r = requests.get(v["contentUrl"], timeout=30)

            # build the path to the output image
            ext = v["contentUrl"][v["contentUrl"].rfind("."):]
            p = os.path.join(args["output"], "{}{}".format(
                str(total).zfill(8), ext))

            # write the image to disk
            with open(p, "wb") as f:
                f.write(r.content)

        # catch the errors that would prevent us from downloading the
        # image and skip it; anything unexpected is allowed to propagate
        except EXCEPTIONS:
            print("[INFO] skipping: {}".format(v["contentUrl"]))
            continue

        # try to load the image from disk
        image = cv2.imread(p)

        # if the image is `None` then we could not properly load the
        # image from disk (so it should be ignored)
        if image is None:
            print("[INFO] deleting: {}".format(p))
            os.remove(p)
            continue

        # update the counter
        total += 1
```

You need to set up the OpenCV environment first. On a Mac this is easy: `brew install` does the job.

Both `MAX_RESULTS` and `GROUP_SIZE` can be adjusted to suit your needs.
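To see how the two settings interact, here is a small sketch of the `offset` values the request loop ends up using. `batch_offsets` is a helper name made up for this illustration; the script itself simply iterates over `range(0, estNumResults, GROUP_SIZE)`.

```python
def batch_offsets(total_estimated, max_results, group_size):
    """List the `offset` values the request loop would use."""
    # never go past what the API estimates is available
    limit = min(total_estimated, max_results)
    return list(range(0, limit, group_size))


# with the values from the script: a cap of 6 results, 3 per request
print(batch_offsets(total_estimated=1000, max_results=6, group_size=3))

# a cap that is not a multiple of the group size still gets a final request
print(batch_offsets(total_estimated=1000, max_results=10, group_size=4))
```

Because every request asks for a full `GROUP_SIZE`, the second case can fetch slightly more than the cap (three requests of 4), so picking a `MAX_RESULTS` that is a multiple of `GROUP_SIZE` keeps the numbers tidy.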

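The overall idea is to send requests in batches, receive the JSON data, then download each image and write it to disk. Each written image is then checked with OpenCV to see whether it can be loaded. If it cannot, the file is deleted, the `total` counter is left unchanged, and the loop moves on to the next result; if it can, that image counts as a success and `total += 1`.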
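The write, check, delete-or-count step can be sketched on its own, without the network or OpenCV. In the sketch below, `save_valid_images` and `looks_like_jpeg` are hypothetical names, and `looks_like_jpeg` is only a crude stand-in for the `cv2.imread(path) is not None` check: it looks at the JPEG magic bytes and nothing more.

```python
import os
import tempfile


def save_valid_images(items, out_dir, can_load):
    """Write each (extension, bytes) pair to disk and keep only loadable files.

    `can_load` stands in for the OpenCV check used by the script.
    Returns the number of files kept.
    """
    total = 0
    for ext, data in items:
        path = os.path.join(out_dir, "{}{}".format(str(total).zfill(8), ext))
        with open(path, "wb") as f:
            f.write(data)
        if not can_load(path):
            # broken file: delete it, leave the counter alone, go to the next item
            os.remove(path)
            continue
        # success: the next file gets the next number
        total += 1
    return total


def looks_like_jpeg(path):
    # crude stand-in for cv2.imread: only checks the JPEG magic bytes
    with open(path, "rb") as f:
        return f.read(3) == b"\xff\xd8\xff"


items = [(".jpg", b"\xff\xd8\xff fake jpeg"),
         (".jpg", b"<html>not an image</html>"),
         (".jpg", b"\xff\xd8\xff another fake jpeg")]
with tempfile.TemporaryDirectory() as out_dir:
    kept = save_valid_images(items, out_dir, looks_like_jpeg)
    print(kept, sorted(os.listdir(out_dir)))
```

Since the counter only advances on success, the rejected file's name is reused by the next image and the saved files stay numbered without gaps.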

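One more thing to watch: list the exceptions that can occur up front, and wrap the handling of each JSON result in a `try`/`except`. The exceptions may include:

- Path exceptions => problems reading or writing files

- Request exceptions => problems with the response or the returned data

- Network exceptions => connection problems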
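When filtering on a list like this, it matters how the match is done: testing the exact type misses subclasses, while `isinstance` or an `except` clause with a tuple follows the class hierarchy. This is relevant because libraries often raise more specific subclasses (`requests`, for example, raises `ReadTimeout`, a subclass of `Timeout`). The sketch below uses built-in exceptions as stand-ins so that it runs without `requests`; `exact_match`, `hierarchy_match` and `try_write` are illustrative names only.

```python
# built-in exceptions used as stand-ins for the script's list
EXCEPTIONS = (OSError, TimeoutError)


def exact_match(exc):
    # membership on the exact type misses subclasses
    return type(exc) in EXCEPTIONS


def hierarchy_match(exc):
    # isinstance (like `except EXCEPTIONS:`) also accepts subclasses
    return isinstance(exc, EXCEPTIONS)


# FileNotFoundError is a subclass of OSError, not OSError itself
err = FileNotFoundError("no such directory")
print(exact_match(err), hierarchy_match(err))


def try_write(path, data):
    """Return True on success, False if one of the listed errors occurred."""
    try:
        with open(path, "wb") as f:
            f.write(data)
    except EXCEPTIONS:
        return False
    return True
```

Passing the tuple directly to `except` also means that anything not on the list propagates instead of being silently swallowed.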

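To run it, use the command line with two arguments, `query` and `output`, which are the search term and the output path. The output directory must be created beforehand.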

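```
python3 search_bing_api.py --query "keyword" --output dataset/keyword
```

The path here is relative to the directory the command is run from. In the example above the command is run from the script's own directory, so the `dataset` folder sits at the same level as the script file.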
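The script expects the output directory to exist already. A possible variation, not part of the original script, is to create it at startup. The sketch below reuses the script's two arguments; `parse_args` and `ensure_output_dir` are names invented for this example.

```python
import argparse
import os


def parse_args(argv=None):
    # same two arguments as the script; argv=None means "use sys.argv"
    ap = argparse.ArgumentParser()
    ap.add_argument("-q", "--query", required=True,
                    help="search query to search Bing Image API for")
    ap.add_argument("-o", "--output", required=True,
                    help="path to output directory of images")
    return vars(ap.parse_args(argv))


def ensure_output_dir(path):
    # create the directory (and any parents); do nothing if it already exists
    os.makedirs(path, exist_ok=True)
    # a relative path is resolved against the current working directory
    return os.path.abspath(path)
```

For example, `ensure_output_dir(parse_args(["-q", "keyword", "-o", "dataset/keyword"])["output"])` would create `dataset/keyword` under the current working directory and return its absolute path.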

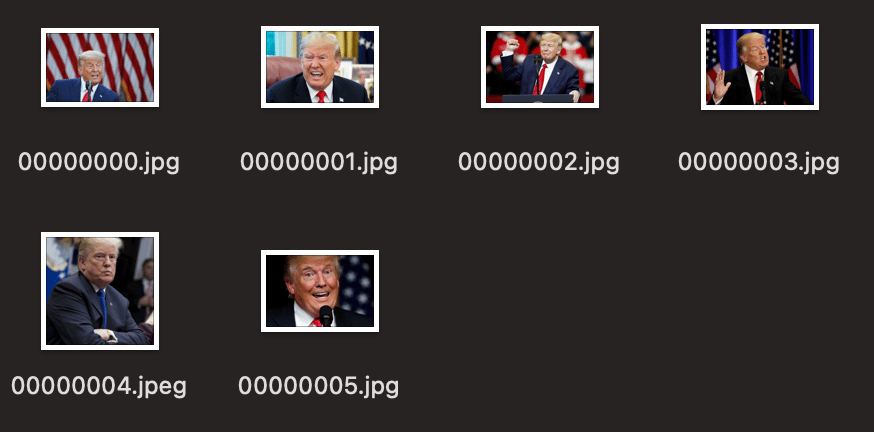

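Overall the experience was very good. In my test, a few dozen images finished almost instantly with no lag, and the quality was high: every face image was correct, and the set covered a range of expressions and angles. I don't have a use for it right now, but for later work that needs a dataset of a specific kind of image (not only faces; animals or objects work too), this looks practical and efficient.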

References:

https://learn.microsoft.com/en-us/azure/cognitive-services/bing-image-search/quickstarts/python

https://cloud.tencent.com/developer/article/1109410?utm_source=pocket_mylist